July 6, 2023

TESTBOX-370 `toHaveKey` works on queries in Lucee but not ColdFusion

TESTBOX-373 Update to `cbstreams` 2.x series for compat purposes.

A brief history of TestBox

In this section, you will find the release notes for each version we release under this major version. If you are looking for the release notes of previous major versions, use the version switcher at the top left of this documentation book. Here is a breakdown of our major version releases.

In this release, we have dropped legacy engines and added support for the BoxLang JVM language, Adobe 2023 and Lucee 6. We have also added major updates to spying and expectations. We continue in this series to focus on productivity and fluency in the Testing language in preparation for more ways to test.

In this release, we have dropped support for legacy CFML engines and introduced the ability to mock data and relationships and build JSON documents.

In this release, we focused on dropping support for legacy CFML engines. We had a major breakthrough in introducing Code Coverage thanks to the folks as well. This major release also came with a new UI for all reporters and streamlined the result viewports.

This version spawned over 8 minor releases. We focused on taking TestBox 1 to a yet higher level, with much more attention to detail and the introduction of modern paradigms like given-when-then, multiple interception points, async executions, and the ability to chain methods.

This was our first major version of TestBox. We had completely migrated from MXUnit, and it introduced BDD to the ColdFusion (CFML) world.

August 1, 2023

The variable thisSuite isn't defined if the for loop in the try/catch is never reached before the error.

New expectations: toBeIn(), toBeInWithCase() so you can verify a needle in string or array targets

New matchers and assertions: toStartWith(), toStartWithCase(), startsWith(), startsWithCase() and their appropriate negations

New matchers and assertions: toEndWith(), toEndWithCase(), endsWith(), endsWithCase()
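A minimal sketch of how the new string matchers might read inside a spec. The bundle and the sample string are illustrative only, and the argument order (actual value in expect(), expected prefix or suffix in the matcher) is an assumption based on how the other matchers are used in this book.

```cfml
component extends="testbox.system.BaseSpec"{

    function run(){
        describe( "String matchers", function(){
            it( "can check prefixes and suffixes", function(){
                // Illustrative value, not taken from the release notes
                var greeting = "Hello World";

                expect( greeting ).toStartWith( "Hello" );
                expect( greeting ).toEndWith( "World" );

                // Negations follow the usual `not` prefix convention
                expect( greeting ).notToStartWith( "World" );
            } );
        } );
    }

}
```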

onSpecError suiteSpecs is invalid, it's suiteStats

TESTBOX-346 expect(sut).toBeInstanceOf("something") breaks if sut is a query

TESTBOX-374 cbstreams doesn't entirely work outside of ColdBox

TESTBOX-20 toBeInstanceOf() Expectation handle Java classes

TestBox allows you to create BDD expectations with our expectations and matcher API DSL. You start by calling our expect() method, usually with an actual value you would like to test. You then concatenate the expectation of that actual value/function to a result or what we call a matcher. You can also concatenate matchers (as of v2.1.0) so you can provide multiple matching expectations to a single value.

expect( 43 ).toBe( 42 );

expect( () => calculator.add(2,2) ).toThrow();

TestBox is a next-generation testing framework based on BDD (Behavior Driven Development) and TDD (Test Driven Development), providing a clean, obvious syntax for writing tests.

TestBox is a next-generation testing framework for the BoxLang JVM language and ColdFusion (CFML), based on BDD (Behavior Driven Development), that provides a clean, obvious syntax for writing tests. It contains not only a testing framework, console/web runner, assertions, and expectations library but also ships with MockBox, a mocking and stubbing companion.

Here is a simple listing of features TestBox brings to the table:

BDD style or xUnit style testing

Testing life-cycle methods

integration for mocking and stubbing

Mocking data library for mocking JSON/complex data and relationships

TestBox is maintained under the guidelines as much as possible. Releases will be numbered in the following format:

And constructed with the following guidelines:

Breaking backward compatibility bumps the major (and resets the minor and patch)

New additions without breaking backward compatibility bump the minor (and resets the patch)

Bug fixes and misc changes bump the patch

TestBox is open source and licensed under the License. If you use it, please try to mention it in your code or website.

Copyright by Ortus Solutions, Corp

TestBox is a registered trademark by Ortus Solutions, Corp

Help Group:

BoxTeam Slack :

We all make mistakes from time to time :) So why not let us know about it and help us out? We also love pull requests, so please star us and fork us:

By Jira:

TestBox is a professional open source software backed by offering services like:

Custom Development

Professional Support & Mentoring

Training

Server Tuning

Official Site:

Current API Docs:

Help Group:

Source Code:

Because of His grace, this project exists. If you don't like this, don't read it, it's not for you.

Therefore being justified by faith, we have peace with God through our Lord Jesus Christ: By whom also we have access by faith into this grace wherein we stand, and rejoice in hope of the glory of God. - Romans 5:1-2

Specs and suites can be focused so ONLY those suites and specs execute. You do this by prefixing certain functions with the letter f or by using the focused argument in each of them. The reporters will show that only these suites or specs were executed. The functions you can prefix are:

it()

describe()

story()

given()

when()

then()

feature()

Please note that if a suite is focused, then all of its children will execute.
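As a rough sketch of the argument-based alternative to the f prefix, the bundle below focuses one spec by name. The suite and spec titles are made up for illustration, and passing focused as a named argument alongside title and body is an assumption based on the description above.

```cfml
component extends="testbox.system.BaseSpec"{

    function run(){
        describe( "A calculator", function(){

            // Intended to behave like fit(): only focused specs should run
            it( title = "adds numbers", focused = true, body = function(){
                expect( 1 + 1 ).toBe( 2 );
            } );

            // Not focused, so it is expected to be left out of the run
            it( "subtracts numbers", function(){
                expect( 2 - 1 ).toBe( 1 );
            } );

        } );
    }

}
```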

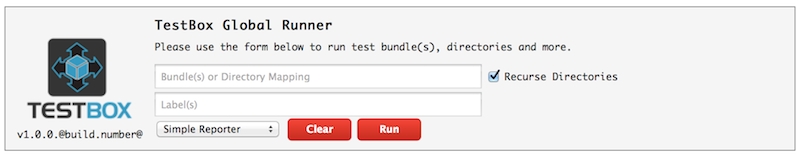

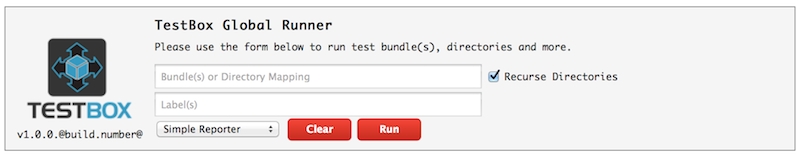

TestBox ships with a global runner that can be used to run pretty much anything. You can customize it or place it wherever you need it. You can find it in your distribution under:

BoxLang: /testbox/bx/test-browser

CFML: /testbox/cfml/test-browser

This is a mini web application to help you run bundles, directories, specs and more.

Legacy Compatibility

TestBox is fully compliant with xUnit test cases. In order to leverage it you will need to create or override the /mxunit mapping and make it point to the /testbox/system/compat folder. That's it, everything should continue to work as expected.

Note: you will still need TestBox to be in the web root, or have a /testbox mapping created, even when using the MXUnit compat runner.

After this, all your test code remains the same but it will execute through TestBox's xUnit runners. You can even execute them via the normal URL you are used to. If there is something that is not compatible, please let us know and we will fix it.

Luis Majano is a Computer Engineer with over 16 years of software development and systems architecture experience. He was born in in the late 70’s, during a period of economical instability and civil war. He lived in El Salvador until 1995 and then moved to Miami, Florida where he completed his Bachelors of Science in Computer Engineering at . Luis resides in The Woodlands, Texas with his beautiful wife Veronica, baby girl Alexia and baby boy Lucas!

He is the CEO of , a consulting firm specializing in web development, ColdFusion (CFML), Java development and all open source professional services under the ColdBox and ContentBox stack. He is the creator of ColdBox, ContentBox, WireBox, MockBox, LogBox and anything “BOX”, and contributes to many open source ColdFusion projects. He is also the Adobe ColdFusion user group manager for the

In addition to the life-cycle methods according to your style, you can make any method a life-cycle method by giving it the desired annotation in its function definition. This is especially useful for parent classes that want to hook in to the TestBox life-cycle.

@beforeAll - Executes once before all specs for the entire test bundle CFC

@afterAll - Executes once after all specs complete in the test bundle CFC

This is more of an approach than an actual specific runner. This approach shows you that you can create a script file in BoxLang (bxs) or in CFML (cfs|cfm) that can in turn execute any test bundle(s) with many runnable configurations.

The BoxLang language allows you to run your scripts via the CLI or the browser if you have a web server attached to your project.

If you want to run it in the CLI, then just use:

If you want to run it via the web server, place it in your /tests/

Tests and suites can be tagged with TestBox labels. Labels allow you to further categorize different tests or suites so that when a runner executes with labels attached, only those tests and suites will be executed and the rest will be skipped. Labels can be applied globally to the component declaration of the test bundle suite or granularly at the test method declaration.
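The runner side of labels can be sketched as follows. The bundle path is the sample one used by the runner scripts in this book, the label names are illustrative, and the excludes argument is assumed to be accepted by the TestBox constructor in the same way as labels.

```cfml
<cfscript>
// Run only the tests and suites labeled "stg"
r = new testbox.system.TestBox( bundles = "tests.specs.BDDTest", labels = "stg" );
writeOutput( r.run() );

// Run everything except what is labeled "dev" (assumed `excludes` argument)
r = new testbox.system.TestBox( bundles = "tests.specs.BDDTest", excludes = "dev" );
writeOutput( r.run() );
</cfscript>
```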

TestBox not only provides you with global life-cycle methods but also with localized test methods. This is a great way to keep your tests DRY (Do not repeat yourself)!

beforeTests() - Executes once before all tests for the entire test bundle CFC

afterTests() - Executes once after all tests complete in the test bundle CFC

The approach that we take with MockBox is a dynamic and minimalistic approach. Why dynamic? Well, because we dynamically transform target objects into mock form at runtime. The API for the mocking factory is very easy to use and provides you a very simplistic approach to mocking.

We even use $() style method calls so you can easily distinguish when using or mocking methods, properties, etc. So what can MockBox do for me?

Create mock objects for you and keep their methods intact (Does not wipe methods, so you can do method spys, or mock helper methods)

Create mock objects and wipe out their method signatures

As you can see from our arguments for a test suite, you can pass an asyncAll argument to the describe() blocks that will allow TestBox to execute all specs in separate threads for you concurrently.

Caution: Once you delve into the asynchronous world you will have to make sure your tests are also thread safe (var-scoped) and provide any necessary locking.

You can pass in an argument called data, which is a struct of dynamic data, to all life-cycle methods. This is useful when creating dynamic suites and specifications. This data will then be passed into the executing body for each life-cycle method for you.

Here is a typical example:

This method can only be used in conjunction with $() as a chained call, as it needs to know which method the results are for.

The purpose of this method is to make a method return more than 1 result in a specific repeating sequence. This means that if you set the mock results to be 2 results and you call your method 4 times, the sequence will repeat itself 1 time. MUMBO JUMBO, show me!! Ok Ok, hold your horses.

As you can see, the sequence repeats itself once the call counter increases. Let's say that you have a test where the first call to a user object's isAuthorized() method is false but then it has to be true. Then you can do this:

The factory takes in one constructor argument that is not mandatory: generationPath. This path is a relative path of where the factory generates internal mocking stubs that are included later on at runtime. Therefore, the path must be a path that can be used using cfinclude. The default path the mock factory uses is the following, so you do not have to specify one, just make sure the path has WRITE permissions:

Hint: If you are using Lucee or ACF10+ you can also decide to use the ram:// resource and place all generated stubs in memory.
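A small sketch of passing a custom generationPath to the factory. The /tests/resources/stubs location is purely hypothetical: it is assumed to exist, to be reachable via cfinclude, and to be writable by the engine.

```cfml
<cfscript>
// Assumption: this path is a hypothetical, writable, includable location
mockBox = new testbox.system.MockBox( generationPath = "/tests/resources/stubs" );

// Create an empty stub and mock a method on it; mocked methods rely on
// the generation path configured above
stub = mockBox.createStub();
stub.$( "getName", "luis" );

writeOutput( stub.getName() );
</cfscript>
```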

This method can help you retrieve any public or private internal state variable so you can do assertions. You can also pass in a scope argument so you can not only retrieve properties from the variables scope but from any nested structure inside of any private scope:

Parameters:

name - The name of the property to retrieve

scope - The scope where the property lives in. The default is variables scope.
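To make the parameters concrete, here is a hedged sketch that first injects a property into a stub with $property() and then reads it back with $getProperty(). The stub, the property name dataNumber, and its value are illustrative only.

```cfml
<cfscript>
mockBox = new testbox.system.MockBox();

// An empty stub stands in for a real model object in this sketch
model = mockBox.createStub();

// Inject a value into the stub's variables scope
model.$property( propertyName = "dataNumber", propertyScope = "variables", mock = 4 );

// Read the private state back so it can be asserted on
dataNumber = model.$getProperty( name = "dataNumber", scope = "variables" );
writeOutput( dataNumber );
</cfscript>
```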

This method is used for debugging purposes. If you would like to get a structure of all the mocking internals of an object, just call this method and it will return to you a structure of data that you can dump for debugging purposes.

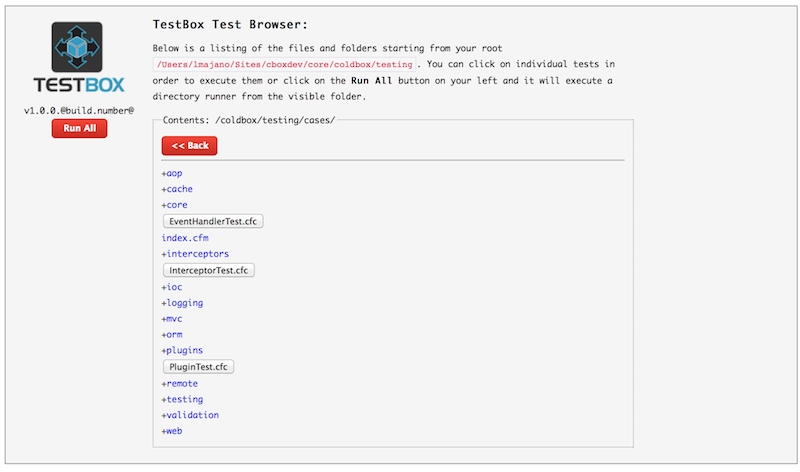

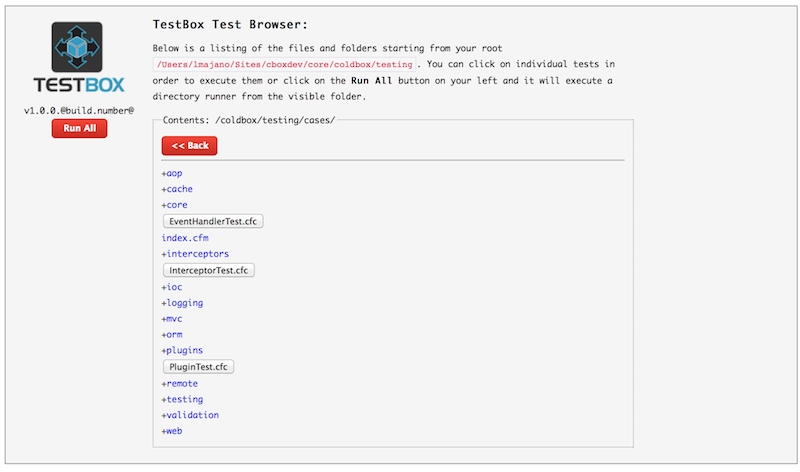

TestBox ships with a test browser that is highly configurable to whatever URL accessible path you want. It will then show you a test browser where you can navigate and execute not only individual tests, but also directory suites as well.

BoxLang: /testbox/bx/test-browser

CFML: /testbox/cfml/test-browser

It is also a mini web application that can be configured to whatever root folder you desire. It will read the runners and tests from that folder and present a GUI that you can use to navigate the test folders and execute them easily.

class{

function run(){

describe( "My calculator features", () => {

beforeEach( () => {

variables.calc = new Calculator()

} )

// Using expectations library

it( "can add", () => {

expect( calc.add(1,1) ).toBe( 2 )

} )

// Using assert library

test( "it can multiply", () => {

assertIsEqual( calc.multiply(2,2), 4 )

} )

} )

}

}

/**

* My calculator features

*/

class{

property calc;

function setup(){

calc = new Calculator()

}

// Function name includes the word 'test'

// Using expectations library

function testAdd(){

expect( calc.add(1,1) ).toBe( 2 )

}

// Any name, but with a test annotation

// Using assertions library

@test

function itCanMultiply(){

$assert.isEqual( calc.multiply(2,2), 4 )

}

}

Create stub objects for objects that don't even exist yet. So you can build to interfaces and later build dependencies.

Decorate instantiated objects with mocking capabilities (So you can mock targeted methods and properties; spys)

Mock internal object properties, basically do property injections in any internal scope

State-Machine Results. Have a method recycle the results as it is called consecutively. So if you have a method returning two results and you call the method 4 times, the results will be recycled: 1,2,1,2

Method call counter, so you can keep track of how many times a method has been called

Method arguments call logging, so you can keep track of method calls and their arguments as they are called. This is a great way to find out what was the payload when calling a mocked method

Ability to mock results depending on the argument signatures sent to a mocked method with capabilities to even provide state-machine results

Ability to mock private/package methods

Ability to mock exceptions from methods or make a method throw a controlled exception

Ability to change the return type of methods or preserve their signature at runtime, extra cool when using stubs that still have no defined signature

Ability to call a debugger method ($debug()) on mocked objects to retrieve extra debugging information about its mocking capabilities and its mocked calls

fstory( "A spec", function() {

it("is focused through its parent story, so I will execute", function() {

coldbox = 0;

coldbox++;

expect( coldbox ).toBe( 1 );

});

});

describe("A spec", function() {

it("is just a closure, so it can contain any code", function() {

coldbox = 0;

coldbox++;

expect( coldbox ).toBe( 1 );

});

fit("can have more than one expectation, and I am focused", function() {

coldbox = 0;

coldbox++;

expect( coldbox ).toBe( 1 );

expect( coldbox ).toBeTrue();

});

});

In compatibility mode we also support the MXUnit expected exception annotation, mxunit:expectedException, and the expectException() method. The expectException() method is not part of the assertion library, but instead is inherited from our BaseSpec.cfc.

Please refer to MXUnit's documentation on the annotation and method for expected exceptions, but it is supported with one caveat. The expectException() method can produce unwanted results if you are running your test specs in TestBox asynchronous mode since it stores state at the component level. Only synchronous mode is supported if you are using the expectException() method. The annotation can be used in both modes.

this.mappings[ "/mxunit" ] = expandPath( "/testbox/system/compat" );

component displayName="TestBox xUnit suite" labels="railo,stg,dev"{

function setup(){

application.wirebox = new coldbox.system.ioc.Injector();

structClear( request );

}

function teardown(){

structDelete( application, "wirebox" );

structClear( request );

}

function testThrows(){

$assert.throws(function(){

var hello = application.wirebox.getInstance( "myINvalidService" ).run();

});

}

function testNotThrows(){

$assert.notThrows(function(){

var hello = application.wirebox.getInstance( "MyValidService" ).run();

});

}

function testFailsShortcut() labels="dev"{

fail( "This Test should fail when executed with labels" );

}

}

describe(title="A spec (with setup and tear-down)", asyncAll=true, body=function() {

beforeEach(function() {

coldbox = 22;

application.wirebox = new coldbox.system.ioc.Injector();

});

afterEach(function() {

coldbox = 0;

structDelete( application, "wirebox" );

});

it("is just a function, so it can contain any code", function() {

expect( coldbox ).toBe( 22 );

});

it("can have more than one expectation and talk to scopes", function() {

expect( coldbox ).toBe( 22 );

expect( application.wirebox.getInstance( 'MyService' ) ).toBeComponent();

});

});

beforeEach(

data = { mydata="luis" },

body = function( currentSpec, data ){

// The arguments.data is bound via the `data` snapshot above.

data.myData == "luis";

}

);

describe( "Ability to bind data to life-cycle methods", function(){

var data = [

"spec1",

"spec2"

];

for( var thisData in data ){

describe( "Trying #thisData#", function(){

beforeEach(

data : { myData = thisData },

body : function( currentSpec, data ){

targetData = arguments.data.myData;

});

it(

title : "should account for life-cycle data binding",

data : { myData = thisData },

body : function( data ){

expect( targetData ).toBe( data.mydata );

}

);

afterEach(

data : { myData = thisData },

body : function( currentSpec, data ){

targetData = arguments.data.myData;

});

});

}

for( var thisData in data ){

describe( "Trying around life-cycles with #thisData#", function(){

aroundEach(

data : { myData = thisData },

body : function( spec, suite, data ){

targetData = arguments.data.myData;

arguments.spec.body( data=arguments.spec.data );

});

it(

title : "should account for life-cycle data binding",

data : { myData = thisData },

body : function( data ){

expect( targetData ).toBe( data.mydata );

});

});

}

});

$(...).$results(...)

//Mock 3 values for the getSetting method

controller.$("getSetting").$results(true,"cacheEnabled","myapp.model");

//Call getSetting 1

<cfdump var="#controller.getSetting()#">

Results = true

//Call getSetting 2

<cfdump var="#controller.getSetting()#">

Results = "cacheEnabled"

//Call getSetting 3

<cfdump var="#controller.getSetting()#">

Results = "myapp.model"

//Call getSetting 4

<cfdump var="#controller.getSetting()#">

Results = true

//Call getSetting 5

<cfdump var="#controller.getSetting()#">

Results = "cacheEnabled"

mockUser = getMockBox().createMock("model.User");

mockUser.$("isAuthorized").$results(false,true);

mockBox = new testbox.system.MockBox();

// Within a TestBox Spec

getMockBox()

/testbox/system/stubs

any $getProperty(name, [scope='variables'])

expect( model.$getProperty("dataNumber", "variables") ).toBe( 4 );

expect( model.$getProperty("name", "variables.instance") ).toBe( "Luis" );

<cfdump var="#targetObject.$debug()#">

This method is NOT injected into mock objects but is available via MockBox directly in order to create queries very quickly. This is a great way to simulate cfquery calls, cfdirectory or any other CF tag that returns a query.

function testGetUsers(){

// Mock a query

mockQuery = mockBox.querySim("id,fname,lname

1 | luis | majano

2 | joe | louis

3 | bob | lainez");

// tell the dao to return this query

mockDAO.$("getUsers", mockQuery);

}

Specs and suites can be tagged with TestBox labels. Labels allow you to further categorize different specs or suites so that when a runner executes with labels attached, only those specs and suites will be executed and the rest will be skipped. You can alternatively choose to skip specific labels when a runner executes with excludes attached.

describe(title="A spec", labels="stg,railo", body=function() {

it("executes if its in staging or in railo", function() {

coldbox = 0;

coldbox++;

expect( coldbox ).toBe( 1 );

});

});

describe("A spec", function() {

it("is just a closure, so it can contain any code", function() {

coldbox = 0;

coldbox++;

expect( coldbox ).toBe( 1 );

});

it(title="can have more than one expectation and labels", labels="dev,stg,qa,shopping", body=function() {

coldbox = 0;

coldbox++;

expect( coldbox ).toBe( 1 );

expect( coldbox ).toBeTrue();

});

});

This method is a quick notation for the $times(0) call but more expressive when written in code:

Boolean $never([methodname])

Parameters:

* methodName - The optional method name to assert the number of method calls

Examples:

security = getMockBox().createMock("model.security");

//No calls yet

expect( security.$never() ).toBeTrue();

security.$("isValidUser",false);

security.isValidUser();

// Asserts

expect( security.$never("isValidUser") ).toBeFalse();

You can prefix your expectation with the not operator to easily create negative expectations for any matcher. When you read the API Docs or the source, you will not find the not methods anywhere. This is because we do this dynamically by convention.

expect( actual )

.notToBe( 4 )

.notToBeTrue()

.notToBeFalse();

@beforeEach - Executes before every single spec in a single describe block and receives the currently executing spec.

@afterEach - Executes after every single spec in a single describe block and receives the currently executing spec.

@aroundEach - Executes around the executing spec so you can provide code surrounding the spec.

Below are several examples using script notation.

DBTestCase.cfc (parent class)

PostsTest.cfc

This also helps parent classes enforce their setup methods are called by annotating the methods with @beforeAll. No more forgetting to call super.beforeAll()!

component extends="coldbox.system.testing.BaseTestCase"{

/**

* @aroundEach

*/

function wrapInDBTransaction( spec, suite ){

transaction action="begin" {

try {

arguments.spec.body();

} catch (any e) {

rethrow;

} finally {

transaction action="rollback";

}

}

}

}

CFML engines only allow you to run tests via the browser. So create your script, place it in your web accessible /tests folder and run it.

// Test the BDD Bundle

r = new testbox.system.TestBox( "tests.specs.BDDTest" )

println( r.run() );

// Test the bundle with ONLY the passed specs

r = new testbox.system.TestBox( "tests.specs.BDDTest" )

println( r.run( testSpecs="OnlyThis,AndThis,AndThis" ) )

// Test the bundle with ONLY the passed suites

r = new testbox.system.TestBox( "tests.specs.BDDTest" )

println( r.run( testSuites="Custom Matchers,A Spec" ) )

// Test the passed array of bundles

r = new testbox.system.TestBox( [ "tests.specs.BDDTest", "tests.specs.BDD2Test" ] )

println( r.run() )

// Test with labels and the minimal reporter

r = new testbox.system.TestBox( bundles: "tests.specs.BDDTest", labels="linux" )

println( r.run( reporter: "mintext" ) )

boxlang run.bxs

setup( currentMethod ) - Executes before every single test case and receives the name of the actual testing method

teardown( currentMethod ) - Executes after every single test case and receives the name of the actual testing method

Examples

component{

function beforeTests(){}

function afterTests(){}

function setup( currentMethod ){}

function teardown( currentMethod ){}

}

component displayName="TestBox xUnit suite" labels="railo,cf"{

function setup( currentMethod ){

application.wirebox = new coldbox.system.ioc.Injector();

structClear( request );

}

function teardown( currentMethod ){

structDelete( application, "wirebox" );

structClear( request );

}

function testThrows(){

$assert.throws(function(){

var hello = application.wirebox.getInstance( "myINvalidService" ).run();

});

}

function testNotThrows(){

$assert.notThrows(function(){

var hello = application.wirebox.getInstance( "MyValidService" ).run();

});

}

}

component extends="DBTestCase"{

/**

* @beforeEach

*/

function setupColdBox() {

setup();

}

function run() {

given( "I have two posts", function(){

when( "I visit the home page", function(){

then( "There should be two posts on the page", function(){

queryExecute( "INSERT INTO posts (body) VALUES ('Test Post One')" );

queryExecute( "INSERT INTO posts (body) VALUES ('Test Post Two')" );

var event = execute( event = "main.index", renderResults = true );

var content = event.getCollection().cbox_rendered_content;

expect(content).toMatch( "Test Post One" );

expect(content).toMatch( "Test Post Two" );

});

});

});

}

}

http://localhost/tests/run.bxs

<cfscript>

// Test the BDD Bundle

r = new testbox.system.TestBox( "tests.specs.BDDTest" )

writeOutput( r.run() );

// Test the bundle with ONLY the passed specs

r = new testbox.system.TestBox( "tests.specs.BDDTest" )

writeOutput( r.run( testSpecs="OnlyThis,AndThis,AndThis" ) )

// Test the bundle with ONLY the passed suites

r = new testbox.system.TestBox( "tests.specs.BDDTest" )

writeOutput( r.run( testSuites="Custom Matchers,A Spec" ) )

// Test the passed array of bundles

r = new testbox.system.TestBox( [ "tests.specs.BDDTest", "tests.specs.BDD2Test" ] )

writeOutput( r.run() )

// Test with labels and the minimal reporter

r = new testbox.system.TestBox( bundles: "tests.specs.BDDTest", labels="linux" )

writeOutput( r.run( reporter: "mintext" ) )

</cfscript>

Ability to extend and create custom test runners and reporters

Extensible reporters, bundled with tons of them:

JSON

XML

JUnit XML

Text

Console

TAP (Test Anything Protocol)

Simple HTML

Min - Minimalistic Heaven

Raw

CommandBox

Asynchronous testing

Multi-suite capabilities

Test skipping

Test labels and tagging

Testing debug output stream

Code Coverage via FusionReactor

Much more!

Security Hardening

Code Reviews

Bug Tracker: https://ortussolutions.atlassian.net/browse/TESTBOX

Twitter: @ortussolutions

Facebook: https://www.facebook.com/ortussolutions

Luis has a passion for Jesus, tennis, golf, volleyball and anything electronic. Random Author Facts:

He played volleyball in the Salvadorean National Team at the tender age of 17

The Lord of the Rings and The Hobbit is something he reads every 5 years. (Geek!)

His first ever computer was a Texas Instrument TI-86 that his parents gave him in 1986. After some time digesting his very first BASIC book, he had written his own tic-tac-toe game at the age of 9. (Extra geek!)

He has a geek love for circuits, microcontrollers and overall embedded systems.

He has of late (during old age) become a fan of running and bike riding with his family.

Keep Jesus number one in your life and in your heart. I did and it changed my life from desolation, defeat and failure to an abundant life full of love, thankfulness, joy and overwhelming peace. As this world breathes failure and fear upon any life, Jesus brings power, love and a sound mind to everybody!

“Trust in the LORD with all your heart, and do not lean on your own understanding.” Proverbs 3:5

Jorge is an Industrial and Systems Engineer born in El Salvador. After finishing his Bachelor studies at the Monterrey Institute of Technology and Higher Education ITESM, Mexico, he went back to his home country where he worked as the COO of Industrias Bendek S.A. In 2012 he left El Salvador and moved to Switzerland in pursuit of the love of his life. He married her and today he resides in Basel with his lovely wife Marta and their daughter Sofía.

Jorge started working as project manager and business developer at Ortus Solutions, Corp. in 2013. At Ortus he fell in love with software development and now enjoys taking part in software development projects and software documentation! He is a fellow Christian who loves to play the guitar, worship and rejoice in the Lord!

Therefore, if anyone is in Christ, the new creation has come: The old has gone, the new is here! 2 Corinthians 5:17

Write capabilities on disk for the default path of /testbox/system/testings/stubs.

You can also choose the directory destination for stub creations yourself when you initialize TestBox. If using ColdFusion 9 or Lucee you can even use ram:// and use the virtual file system.

The testing bundle CFC is actually the suite in xUnit style as it contains all the test methods you would like to test with. Usually, this CFC represents a test case for a specific software under test (SUT), whether that's a model object, service, etc. This component can have some cool annotations as well that can alter its behavior.

component displayName="The name of my suite" asyncAll="boolean" labels="list" skip="boolean"{

}

TestBox relies on creating testing bundles, which are basically CFCs. A bundle CFC will hold all the tests the TestBox runner will execute and produce reports on. Thus, sometimes this test bundle is referred to as a test suite in xUnit terms.

Argument

Caution: If you activate the asyncAll flag for asynchronous testing, you HAVE to make sure your tests are also thread safe and appropriately locked.

Learn about the authors of TestBox and how to support the project.

The source code for this book is hosted on GitHub: https://github.com/Ortus-Solutions/testbox-docs. You can freely contribute to it and submit pull requests. The contents of this book are copyrighted by Ortus Solutions, Corp and cannot be altered or reproduced without the author's consent. All content is provided "As-Is" and can be freely distributed.

Flash, Flex, ColdFusion, and Adobe are registered trademarks and copyrights of Adobe Systems, Inc.

BoxLang, ColdBox, CommandBox, FORGEBOX, TestBox, ContentBox, and Ortus Solutions are all trademarks and copyrights of Ortus Solutions, Corp.

The information in this book is distributed “as is” without warranty. The author and Ortus Solutions, Corp shall not have any liability to any person or entity concerning loss or damage caused or alleged to be caused directly or indirectly by the content of this training book, software, and resources described in it.

We highly encourage contributions to this book and our open-source software. The source code for this book can be found in our where you can submit pull requests.

15% of the proceeds of this book will go to charity to support orphaned kids in El Salvador - . So please donate and purchase the printed version of this book; every book sold can help a child for almost 2 months.

Shalom Children’s Home is one of the ministries that are dear to our hearts located in El Salvador. During the 12-year civil war that ended in 1990, many children were left orphaned or abandoned by parents who fled El Salvador. The Benners saw the need to help these children and received 13 children in 1982. Little by little, more children came on their own, churches and the government brought children to them for care, and the Shalom Children’s Home was founded.

Shalom now cares for over 80 children in El Salvador, from newborns to 18 years old. They receive shelter, clothing, food, medical care, education, and life skills training in a Christian environment. The home is supported by a child sponsorship program.

We have personally supported Shalom for over 6 years now; it is a place of blessing for many children in El Salvador who either have no families or have been abandoned. This is a good earth to seed and plant.

A modern editor can enhance your testing experience. We recommend VSCode due to the extensive modules library. Here are the plugins we maintain for each platform.

The VSCode plugin is the best way for you to interact with TestBox alongside the BoxLang plugin. It allows you to run tests, generate tests, navigate tests and much more.

If you are creating runners and want to tap into the runner listeners or callbacks, you can do so by creating a class or a struct with the different events we announce.

onBundleStart : When each bundle begins execution

onBundleEnd : When each bundle ends execution

onSuiteStart : Before a suite (describe, story, scenario, etc)

onSuiteEnd : After a suite

Every run() and runRaw() method accepts a callbacks argument, which can be a class with the right listener methods or a struct with the right closure methods. This allows you to listen to the testing progress and get information about it, so you can build informative reports or progress bars.
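As a rough illustration, the struct form of the callbacks argument could look like the sketch below. The tests.specs directory is a hypothetical mapping, and the closures deliberately declare no parameters so the sketch makes no claim about the exact arguments the runner passes to each event.

```cfml
// Sketch only: "tests.specs" is a hypothetical directory mapping.
// Simple counters that the listeners update as the run progresses.
progress = { bundles = 0, suites = 0, specs = 0 };

testbox = new testbox.system.TestBox( directory = "tests.specs" );

// One closure per announced event; unused events are left as no-ops.
results = testbox.run(
    callbacks = {
        onBundleStart = function(){ progress.bundles++; },
        onBundleEnd   = function(){},
        onSuiteStart  = function(){ progress.suites++; },
        onSuiteEnd    = function(){},
        onSpecStart   = function(){},
        onSpecEnd     = function(){ progress.specs++; }
    }
);

writeOutput( "Finished #progress.specs# specs in #progress.suites# suites across #progress.bundles# bundles" );
```

The same listener names can be implemented as methods on a class and passed in place of the struct.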

TestBox comes also with a nice plethora of reporters:

ANTJunit : A specific variant of JUnit XML that works with the ANT junitreport task

Codexwiki : Produces MediaWiki syntax for usage in Codex Wiki

Console : Sends report to console

Doc : Builds semantic HTML to produce nice documentation

Dot : Builds an awesome dot report

JSON : Builds a report into JSON

JUnit : Builds a JUnit compliant report

Raw : Returns the raw structure representation of the testing results

Simple : A basic HTML reporter

Text : Back to the 80's with an awesome text report

XML : Builds yet another XML testing report

Tap : A test anything protocol reporter

Min : A minimalistic view of your test reports

MinText : A minimalistic view of your test reports for consoles

NodeJS : User-contributed: https://www.npmjs.com/package/testbox-runner

A Test Bundle is a CFC

TestBox relies on testing bundles, which are basically CFCs. A bundle CFC holds all the suites and specs that a TestBox runner will execute and report on. Don't worry, we will cover what a suite and a spec are as well. Bundle names usually end with *Spec or *Test.

component extends="testbox.system.BaseSpec"{

// executes before all suites

function beforeAll(){}

// executes after all suites

function afterAll(){}

// All suites go in here

function run( testResults, testBox ){

}

}

This bundle CFC can contain 2 life-cycle functions and a single run() function where you will write your test suites and specs.

The beforeAll() and afterAll() methods are called life-cycle methods. They will execute once before the run() function and once after the run() function. This is a great way to do any global setup or tear down in your tests.

The run() function receives the TestBox testResults object and the testbox object, both by reference. This gives you access to the metadata that will be reported to users by a reporter. You can also use it to decorate the results or store extra information that reports can pick up later. The testbox object lets you see how the test is supposed to execute: which labels it was passed, directories, options, etc.

There is a user-contributed NodeJS Runner that looks fantastic and can be downloaded here: https://www.npmjs.com/package/testbox-runner

You can install it with npm as well.

npm install -g testbox-runner

Create a config file called .testbox-runnerrc in the root of your web project.

{

"runner": "http://localhost/testbox/system/runners/HTMLRunner.cfm",

"directory": "/tests/specs",

"recurse": true

}

Then use the CLI command to run whatever you configured.

testbox-runner

You can also specify a specific configuration file:

testbox-runner --config /path/to/config/file.json

Simply run the utility and pass the above configuration options prefixed with --.

Example

TestBox comes with a plethora of assertions that cover what we believe are common scenarios. However, we recommend that you create custom assertions that meet your needs and criteria so that you can avoid duplication and gain re-usability. A custom assertion function can receive any number of arguments, but it must use the fail() method to fail an assertion, or return true or void to pass.

Here is an example:

You can register assertion functions in several ways within TestBox, but we always recommend that you register them inside of the beforeTests()

BDD stands for Behavior-Driven Development. It is a software development process that aims to improve collaboration between developers, testers, and business stakeholders. BDD involves creating automated tests that are based on the expected behavior of the software, rather than just testing individual code components. This approach helps ensure that the software meets the desired functionality and is easier to maintain and update in the future.

In traditional xUnit, you focus on every component's method individually. In BDD, we focus on a feature or story to complete, which could include testing many different components to satisfy the criteria. TestBox allows us to create these types of tests with human-readable functions matching our features/stories and expectations.

Every test harness comes with a runner.bx or runner.cfm in the root of the tests folder. This is called the web runner and is executable via the web server you are running your application on. This will execute all the tests by convention found in the tests/specs folder.

You can open that file and customize it as you see fit. Here is an example of such a file:

Running tests is essential, of course. There are many ways to run your tests; we will cover the basics here, and you can check out the section in our in-depth guide for more.

The easiest way to run your tests is to use the TestBox CLI via the testbox run command. Ensure you are in the web root of your project or have configured the box.json to include the TestBox runner in it as shown below. If not, CommandBox will try to run your site + tests/runner.cfm for you by convention.

In order to use TestBox Code Coverage, you will need TestBox 3.x or higher installed, a licensed installation of FusionReactor, and a working test suite. You may have some or all of these already, so skip the sections that don't apply to you.

If you don't have FusionReactor installed, you can do so very easily in CommandBox like so:

That's it! All servers you start now will have FusionReactor configured. You can open FusionReactor's web console via the menu item in your server's tray icon. Note, the FusionReactor web admin is not required to get TestBox code coverage.

If you are not using CommandBox for your server, follow the instructions on FusionReactor's website. If you need a license key, please

When writing tests for an app or library, it's generally accepted that more tests are better, since you're covering more functionality and are more likely to catch regressions as they happen. This is true, but more specifically, it's important that your tests run as much code in your project as possible. Tests obviously can't check code that they don't run!

With BDD, there is not a one-to-one correlation between a test and a unit of code. You may have a test for your login page, but how do you know if all the else blocks in your if statements or case blocks in your switch statements were run? Was your error routine tested? What about optional features, or code that only kicks in on the 3rd Tuesday of months in the springtime? These can be difficult questions to answer just by staring at the results of your tests. The answer to this is Code Coverage.

The toBe() matcher represents an equality matcher, much like how $assert.isEqual() behaves. Below are several of the most common matchers available to you. However, the best way to see which ones are available is to check out the .
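To make the matchers more concrete, here is a minimal sketch of a bundle that exercises a handful of them. The bundle itself and the sample data are hypothetical; only matchers named in this section are used.

```cfml
// Sketch only: a hypothetical bundle with made-up sample data.
component extends="testbox.system.BaseSpec" {

    function run( testResults, testBox ){
        describe( "Common matchers", function(){

            it( "compares values", function(){
                expect( 1 + 1 ).toBe( 2 );
                expect( "TestBox" ).toBeWithCase( "TestBox" );
                expect( 10 ).toBeGT( 5 );
                expect( 5 ).toBeBetween( 1, 10 );
            } );

            it( "inspects collections and strings", function(){
                var user = { name = "Luis", roles = [ "admin", "editor" ] };

                // Matchers return the expectation, so they can be chained
                expect( user ).toBeStruct().toHaveKey( "name" );
                expect( user.roles ).toBeArray().toHaveLength( 2 );
                expect( user.roles ).toInclude( "admin" );

                // toMatch() is not case-sensitive
                expect( "Hello TestBox" ).toMatch( "testbox$" );
                expect( [] ).toBeEmpty();
            } );

        } );
    }

}
```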

TestBox supports the concept of assertions to allow for validations and for legacy tests. We encourage developers to use our BDD expectations as they are more readable and fun to use (Yes, fun I said!).

The assertions are modeled in the class testbox.system.Assertion, so you can visit the for the latest assertions available. Each test bundle will receive a variable called $assert which represents the assertions object.

If you are running and testing with BoxLang, you will have the extra benefit of the assertions dynamic methods. This allows you to just call the method in the

The following methods are also mixed in at run-time into mock objects, and they are used to verify behavior and calls from these mock/stub objects. They are great for finding out how many mocked method calls have been made and which arguments were passed to each mocked method call.

This method is used in order to mock an internal property on the target object. Let's say that the object has a private property of userDAO that lives in the variables scope and the lifecycle for the object is controlled by its parent, in this case the user service. This means that this dependency is created by the user service and not injected by an external force or dependency injection framework. How do we mock this? Very easily by using the $property() method on the target object.

Parameters:

propertyName - The name of the property to mock

propertyScope - The scope where the property lives in. The default is variables scope.
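A minimal sketch of the userDAO scenario described above follows. The model.UserService and model.UserDAO classes, and the assumption that userService.list() delegates to userDAO.findAll(), are hypothetical and only serve the illustration.

```cfml
// Sketch only: model.UserService and model.UserDAO are hypothetical classes,
// and list() is assumed to delegate to variables.userDAO.findAll().
it( "can list users without touching the database", function(){
    // Stand-in for the DAO that the service normally creates on its own
    var mockDAO = createEmptyMock( "model.UserDAO" );
    mockDAO.$( "findAll", [ "luis", "brad" ] );

    // Prepare the service for mocking and inject the DAO into its variables scope
    var userService = createMock( "model.UserService" );
    userService.$property( propertyName = "userDAO", propertyScope = "variables", mock = mockDAO );

    expect( userService.list() ).toHaveLength( 2 );
    expect( mockDAO.$once( "findAll" ) ).toBeTrue();
} );
```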

This method can help you verify that at least a minimum number of calls have been made to all mocked methods or a specific mocked method.

Parameters:

minNumberOfInvocations - The min number of calls to assert

methodName - The optional method name to assert the number of method calls

This method can help you verify that only ONE mocked method call has been made on the entire mock or a specific mocked method. Useful alias!

Parameters:

methodName - The optional method name to assert the number of method calls

Examples:

This method is used to retrieve a structure of method calls that have been made on mocked methods of the mock object. This is extremely useful when you want to assert that a certain method was called with the appropriate arguments. Great for testing method calls that save or update data to some kind of persistent storage. Also great to find out what the state of the data of a call was at certain points in time.

Each mocked method is a key in the structure that contains an array of calls. Each array element can have 0 or more arguments that are traced when methods were called with arguments. If they were made with ordered or named arguments, you will be able to know the difference. We recommend dumping out the structure to check out its composition.

Examples:

This method is used to tell MockBox that you want to mock a method with a SPECIFIC set of call arguments. You will then have to set the return results for it. This is absolutely necessary if you need to test an object that makes several calls to the same method with different arguments, and you need to mock different results coming back. For example, let's say you are using a ColdBox configuration bean that holds configuration data. You make several calls to the getKey() method with different arguments:

How in the world can I mock this? Well, using the mock arguments method.

Hint: So remember that if you use the $args() call, you need to tell it what kind of results you are expecting by calling the $results() method after it, or you might end up with an exception.

If the method you are mocking is called using named arguments then you can mock this using:

Spy like us!

MockBox now supports a $spy( method ) method that allows you to spy on methods with all the call log goodness but without removing all the methods. Every other method remains intact, and the actual spied method remains active. We decorate it to track its calls and return data via the $callLog() method.

Example of CUT:

Example Test:

Our default syntax for expecting exceptions is to use our closure approach concatenated with our toThrow() method in our expectations or our throws() method in our assertions object.

Info Please always remember to pass in a closure to these methods and not the actual test call:

function(){ myObj.method();}

Example
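As a hedged sketch of the closure approach, the bundle below defines a hypothetical withdraw() function purely for illustration and checks it with toThrow(), using the type and regex arguments listed for that matcher.

```cfml
// Sketch only: withdraw() is a hypothetical function defined for this example.
component extends="testbox.system.BaseSpec" {

    function withdraw( required numeric amount ){
        if( arguments.amount <= 0 ){
            throw( type = "InvalidAmount", message = "Amount must be positive" );
        }
        return arguments.amount;
    }

    function run( testResults, testBox ){
        describe( "Withdrawals", function(){

            it( "rejects non-positive amounts", function(){
                // Pass a closure, not the result of the call
                expect( function(){
                    withdraw( 0 );
                } ).toThrow( type = "InvalidAmount", regex = "positive" );
            } );

            it( "accepts positive amounts", function(){
                // A valid call must not throw
                expect( function(){
                    withdraw( 50 );
                } ).notToThrow();
            } );

        } );
    }

}
```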

This will execute the closure in a nested try/catch block and make sure that it threw an exception, threw with a type, or threw with a type and a regex match of the exception message. If you are in an environment that does not support closures then you will need to create a spec testing function that either uses the expectedException

Get the number of times a method has been called or the entire number of calls made to ANY mocked method on this mock object. If the method has never been called, you will receive a 0. If the method does not exist or has not been mocked, then it will return a -1.

Parameters:

methodName - Name of the method to get the counter for (Optional)

This method is a utility method used to clear out all call logging and method counters.

component{

function run(){

describe( "My calculator features", () => {

beforeEach( () => {

variables.calc = new Calculator()

} );

// Using expectations library

it( "can add", () => {

expect( calc.add(1,1) ).toBe( 2 )

} );

// Using assert library

test( "it can multiply", () => {

$assert.isEqual( calc.multiply(2,2), 4 )

} );

} );

}

}

/**

* My calculator features

*/

component{

property calc;

function setup(){

calc = new Calculator()

}

// Function name includes the word 'test'

// Using expectations library

function testAdd(){

expect( calc.add(1,1) ).toBe( 2 )

}

// Any name, but with a test annotation

// Using assertions library

function itCanMultiply() test{

$assert.isEqual( calc.multiply(2,2), 4 )

}

}

<major>.<minor>.<patch>

component displayName="My test suite" extends="testbox.system.BaseSpec"{

// executes before all tests

function beforeTests(){}

// executes after all tests

function afterTests(){}

}

| Argument | Required | Default | Type | Description |
| --- | --- | --- | --- | --- |
| displayName | false | -- | string | If used, this will be the name of the test suite in the reporters. |
| asyncAll | false | false | boolean | If true, it will execute all the test methods in parallel and join at the end asynchronously. |
| labels | false | -- | string/list | The list of labels this test belongs to |
| skip | false | false | boolean/udf | A boolean flag that makes the runners skip the test for execution. It can also be the name of a UDF in the same CFC that will be executed and MUST return a boolean value. |
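The arguments above are placed on the component declaration of the bundle. The following sketch shows one possible combination; the display name, labels, the isFeatureDisabled() UDF, and the server key it checks are all hypothetical.

```cfml
// Sketch only: the names, labels and the UDF below are hypothetical.
component
    displayName="User service tests"
    labels="unit,services"
    asyncAll="false"
    skip="isFeatureDisabled"
    extends="testbox.system.BaseSpec"
{

    // Referenced by the skip argument above; it MUST return a boolean
    boolean function isFeatureDisabled(){
        return !structKeyExists( server, "userServiceEnabled" );
    }

    function testPlaceholder(){
        $assert.isTrue( true );
    }

}
```

Using a UDF for skip keeps the decision in code, so the bundle can be skipped per environment without editing the declaration.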

Please refer to our MockBox section to take advantage of all the mocking and stubbing you can do. However, every BDD TestBundle has the following functions available to you for mocking and stubbing purposes:

makePublic( target, method, newName ) - Exposes private methods from objects as public methods

querySim( queryData ) - Simulate a query

getMockBox( [generationPath] ) - Get a reference to MockBox

createEmptyMock( [className], [object], [callLogging=true]) - Create an empty mock from a class or object

createMock( [className], [object], [clearMethods=false], [callLogging=true]) - Create a spy from an instance or class with call logging

prepareMock( object, [callLogging=true]) - Prepare an instance of an object for method spies with call logging

createStub( [callLogging=true], [extends], [implements]) - Create stub objects with call logging and optional inheritance trees and implementation methods

getProperty( target, name, [scope=variables], [defaultValue] ) - Get a property from an object in any scope

| Method Name | Return Type | Description |
| --- | --- | --- |
| $count([methodName]) | Numeric | Get the number of times all mocked methods have been called on a mock, or pass in a method name and get that method's call count. |
| $times(count,[methodName]) or $verifyCallCount(count,[methodName]) | Boolean | Assert how many calls have been made to the mock or a specific mock method |
| $never([methodName]) | Boolean | Assert that no interactions have been made to the mock or a specific mock method: Alias to $times(0) |
| $atLeast(minNumberOfInvocations,[methodName]) | Boolean | Assert that at least a certain number of calls have been made on the mock or a specific mock method |
| $once([methodName]) | Boolean | Assert that only 1 call has been made on the mock or a specific mock method |
| $atMost(maxNumberOfInvocations, [methodName]) | Boolean | Assert that at most a certain number of calls have been made on the mock or a specific mock method. |
| $callLog() | struct | Retrieve the method call logger structure of all mocked method calls. |
| $reset() | void | Reset all mock counters and logs on the targeted mock. |
| $debug() | struct | Retrieve a structure of mocking debugging information about a mock object. |

onSpecStart : Before a spec (it, test, then)

onSpecEnd : After a spec

// executes before all suites

function beforeAll(){}

// executes after all suites

function afterAll(){}

function run( testResults, testBox ){

}

describe( "Tests of TestBox behaviour", () => {

it( "rejects 5 as being between 1 and 10", () => {

expect( () => {

expect( 5 ).notToBeBetween( 1, 10 );

} ).toThrow();

} );

it( "rejects 10 as being between 1 and 10", () => {

expect( () => {

expect( 10 ).notToBeBetween( 1, 10 );

} ).toThrow();

} );

} );

feature( "Given-When-Then test language support", () => {

scenario( "I want to be able to write tests using Given-When-Then language", () => {

given( "I am using TestBox", () => {

when( "I run this test suite", () => {

then( "it should be supported", () => {

expect( true ).toBe( true );

} );

} );

} );

} );

} );

story( "I want to list all authors", () => {

given( "no options", () => {

then( "it can display all active system authors", () => {

var event = this.get( "/cbapi/v1/authors" );

expect( event.getResponse() ).toHaveStatus( 200 );

expect( event.getResponse().getData() ).toBeArray().notToBeEmpty();

event

.getResponse()

.getData()

.each( function( thisItem ){

expect( thisItem.isActive ).toBeTrue( thisItem.toString() );

} );

} );

} );

given( "isActive = false", () => {

then( "it should display inactive users", () => {

var event = this.get( "/cbapi/v1/authors?isActive=false" );

expect( event.getResponse() ).toHaveStatus( 200 );

expect( event.getResponse().getData() ).toBeArray().notToBeEmpty();

event

.getResponse()

.getData()

.each( function( thisItem ){

expect( thisItem.isActive ).toBeFalse( thisItem.toString() );

} );

} );

} );

given( "a search criteria", () => {

then( "it should display searched users", () => {

var event = this.get( "/cbapi/v1/authors?search=tester" );

expect( event.getResponse() ).toHaveStatus( 200 );

expect( event.getResponse().getData() ).toBeArray().notToBeEmpty();

} );

} );

} );

toBeTrue( [message] ) : Expects the value to be true

toBeFalse( [message] ) : Expects the value to be false

toBe( expected, [message] ) : Assert that the actual value equals the expected value; the comparison is not case-sensitive

toBeWithCase( expected, [message] ) : Expects with case

toBeNull( [message] ) : Expects the value to be null

toBeInstanceOf( class, [message] ) : To be the class instance passed

toMatch( regex, [message] ) : Matches a string with no case-sensitivity

toMatchWithCase( regex, [message] ) : Matches with case-sensitivity

toBeTypeOf( type, [message] ) : Assert the type of the incoming actual data, it uses the internal ColdFusion isValid() function behind the scenes, type can be array, binary, boolean, component, date, time, float, numeric, integer, query, string, struct, url, uuid plus all the ones from isValid()

toBe{type}( [message] ) : Same as above but more readable method name. Example: .toBeStruct(), .toBeArray()

toBeEmpty( [message] ) : Tests if an array or struct or string or query is empty

toHaveKey( key, [message] ) : Tests the existence of one key in a structure or hash map

toHaveDeepKey( key, [message] ) : Assert that a given key exists in the passed in struct by searching the entire nested structure

toHaveLength( length, [message] ) : Assert the size of a given string, array, structure or query

toThrow( [type], [regex], [message] );

toBeCloseTo( expected, delta, [datepart], [message] ) : Can be used to approximate numbers or dates according to the expected and delta arguments. For date ranges use the datepart values.

toBeBetween( min, max, [message] ) : Assert that the passed in actual number or date is between the passed in min and max values

toInclude( needle, [message] ) : Assert that the given "needle" argument exists in the incoming string or array with no case-sensitivity, needle in a haystack anyone?

toIncludeWithCase( needle, [message] ) : Assert that the given "needle" argument exists in the incoming string or array with case-sensitivity, needle in a haystack anyone?

toBeGT( target, [message] ) : Assert that the actual value is greater than the target value

toBeGTE( target, [message] ) : Assert that the actual value is greater than or equal to the target value

toBeLT( target, [message] ) : Assert that the actual value is less than the target value

toBeLTE( target, [message] ) : Assert that the actual value is less than or equal to the target value

Boolean $atLeast(minNumberOfInvocations,[methodname])

// let's say we have a service that verifies user credentials

// and if not valid, then tries to check if the user can be inflated from a cookie

// and then verified again

function verifyUser(){

if( isValidUser() ){

log.info("user is valid, doing valid operations");

}

// check if user cookie exists

if( isUserCookieValid() ){

// inflate credentials

inflateUserFromCookie();

// Validate them again

if( NOT isValidUser() ){

log.error("user from cookie invalid, aborting");

}

}

}

// Now the test

it( "can verify a user", function(){

security = createMock("model.security").$("isValidUser",false);

security.storeUserCookie("invalid");

security.verifyUser();

// Asserts that isValidUser() has been called at least 5 times

expect( security.$atLeast(5,"isValidUser") ).toBeFalse();

// Asserts that isValidUser() has been called at least 2 times

expect( security.$atLeast(2,"isValidUser") ).toBeFalse();

});

Boolean $once([methodname])

// let's say we have a service that verifies user credentials

// and if not valid, then tries to check if the user can be inflated from a cookie

// and then verified again

function verifyUser(){

if( isValidUser() ){

log.info("user is valid, doing valid operations");

}

// check if user cookie exists

if( isUserCookieValid() ){

// inflate credentials

inflateUserFromCookie();

// Validate them again

if( NOT isValidUser() ){

log.error("user from cookie invalid, aborting");

}

}

}

// Now the test

it( "can verify a user", function(){

security = getMockBox().createMock("model.security").$("isValidUser",false);

// with an invalid cookie, verifyUser() only checks isValidUser() once

security.storeUserCookie("invalid");

security.verifyUser();

expect( security.$once("isValidUser") ).toBeTrue();

});

struct $callLog()

security = getMockBox().createMock("model.security");

//Call methods on it that perform something, but mock the saveUserState method, it returns void

security.$("saveUserState");

//get the call log for this method

userStateLog = security.$callLog().saveUserState;

expect( arrayLen(userStateLog) eq 0 ).toBeTrue();

configBean.getKey('DebugMode');

configBean.getKey('OutgoingMail');

//get a mock config bean

mockConfig = getMockBox().createEmptyMock("coldbox.system.beans.ConfigBean");

//mock the method for positional arguments

mockConfig.$("getKey").$args("debugmode").$results(true);

mockConfig.$("getKey").$args("OutgoingMail").$results('[email protected]');

//Then you can call and get the expected results

//get a mock config bean

mockConfig = getMockBox().createEmptyMock("coldbox.system.beans.ConfigBean");

//mock the method for named arguments

mockConfig.$("getKey").$args(name="debugmode").$results(true);

void function doSomething(foo){

// some code here then...

local.foo = variables.collaborator.callMe(arguments.foo);

variables.collaborator.whatever(local.foo);

}

function test_it(){

local.mocked = createMock( "com.foo.collaborator" )

.$spy( "callMe" )

.$spy( "whatever" );

variables.CUT.$property( "collaborator", "variables", local.mocked );

// exercise the method under test so the spied methods are actually called

variables.CUT.doSomething( "bar" );

assertEquals( 1, local.mocked.$count( "callMe" ) );

assertEquals( 1, local.mocked.$count( "whatever" ) );

}

numeric $count([string methodName])

mockUser = getMockBox().createMock("model.User");

mockUser.$("isAuthorized").$results(false,true);

debug(mockUser.$count("isAuthorized"));

//DUMPS 0

mockUser.isAuthorized();

debug(mockUser.$count("isAuthorized"));

//DUMPS 1

mockUser.isAuthorized();

debug(mockUser.$count("isAuthorized"));

//DUMPS 2

// dumps 2 also

debug( mockUser.$count() );

void $reset()

security = getMockBox().createMock("model.security").$("isValidUser", true);

security.isValidUser( mockUser );

// now clear out all call logs and test again

security.$reset();

mockUser.$property("authorized","variables",true);

security.isValidUser( mockUser );

Unit testing is a software testing technique where individual components of a software application, known as units, are tested in isolation to ensure they work as intended. Each unit is a small part of the application, such as a function or method, and is tested independently from other parts. This helps identify and fix bugs early in the development process, ensures code quality, and facilitates easier maintenance and refactoring. Tools like TestBox allow developers to create and run automated unit tests, providing assertions to verify the correctness of the code.

TestBox supports xUnit style of testing, like in other languages, via the creation of classes and functions that denote the tests to execute. You can then evaluate the test either using assertions or the expectations library included with TestBox.

You will start by creating a test bundle (usually with the word Test at the front or back), for example: UserServiceTest or TestUserService.

This method can help you verify that at most a maximum number of calls have been made to all mocked methods or a specific mocked method.

Boolean $atMost(maxNumberOfInvocations,[methodname])

Parameters:

maxNumberOfInvocations - The max number of calls to assert

methodName - The optional method name to assert the number of method calls

Examples:

// let's say we have a service that verifies user credentials

// and if not valid, then tries to check if the user can be inflated from a cookie

// and then verified again

function verifyUser(){

if( isValidUser() ){

log.info("user is valid, doing valid operations");

}

// check if user cookie exists

if( isUserCookieValid() ){

// inflate credentials

inflateUserFromCookie();

// Validate them again

if( NOT isValidUser() ){

log.error("user from cookie invalid, aborting");

}

}

}

// Now the test

it( "can verify a user", function(){

security = createMock("model.security").$("isValidUser",false);

security.storeUserCookie("valid");

security.verifyUser();

// Asserts that isValidUser() has been called at most 1 time

expect( security.$atMost(1,"isValidUser") ).toBeFalse();

});

This method is used to tell MockBox that you want a mocked method to throw a specific exception. The exception will be thrown instead of the method returning results. This is an alternative to passing the exception in the initial $() call. In addition to the fluent API, the $throws() method also has the benefit of being able to be tied to specific $args() in a mocked object.

To continue with our getKey() example:

configBean.getKey('DebugMode'); // Exists

configBean.getKey('OutgoingMail'); // Exists

configBean.getKey('IncmingMail'); // Does not exist (see the typo?)

We want to test that keys that don't exist throw a MissingSetting exception. Let's do that using the $throws() method:

// get a mock config bean

mockConfig = getMockBox().createEmptyMock( "coldbox.system.beans.ConfigBean" );

// mock the method with args

mockConfig.$( "getKey" ).$args( "debugmode" ).$results( true );

mockConfig.$( "getKey" ).$args( "OutgoingMail" ).$results( "[email protected]" );

// Here's the new $throws() call

mockConfig.$( "getKey" ).$args( "IncmingMail" ).$throws( type = "MissingSetting" );

// Then you can call and get the expected results

expect( function(){

mockConfig.getKey( "IncmingMail" );

} ).toThrow( "MissingSetting" );

Hint: Remember that the $throws() call must be chained to a $() or a $args() call.

This method is used to assert how many times a mocked method has been called or ANY mocked method has been called.

Boolean $times(numeric count, [methodname])

Parameters:

count - The number of times any method or a specific mocked method has been called

methodName - The optional method name to assert the number of method calls

Examples

security = getMockBox().createMock("model.security");

//No calls yet

expect( security.$times(0) ).toBeTrue();

security.$("isValidUser",false);

security.isValidUser();

// Asserts

expect( security.$times(1) ).toBeTrue();

expect( security.$times(1,"isValidUser") ).toBeTrue();

security.$("authenticate",true);

security.authenticate("username","password");

expect( security.$times(2) ).toBeTrue();

expect( security.$times(1,"authenticate") ).toBeTrue();

nothing - If you just add the annotation, we will detect it and skip the test

true - Skips the test

false - Does not skip the test

{udf_name} - It will look for a UDF with that name and execute it; the returned value must evaluate to a boolean.

You can also skip manually by using the skip() method in the Assertion library, which is also available in any bundle that inherits from the BaseSpec class.

You can use the $assert.skip( message, detail ) method to skip any spec or suite a-la-carte instead of as an argument to the function definitions. This lets you programmatically skip certain specs and suites and pass a nice message.

The BaseSpec has this method available to you as well.

setup()

You can pass a structure of key/value pairs of the assertions you would like to register. The key is the name of the assertion function and the value is the closure function representation.

Once it is registered, you can use it from the $assert object it was mixed into.

You can also store a plethora of assertions (Yes, I said plethora), in a class and register that as the assertions via its instantiation path. This provides much more flexibility and re-usability for your projects.

You can also register more than 1 class by using a list or an array:

Here is the custom assertions source:

function isNotAwesome( required expected ){

return ( arguments.expected == "TestBox" ? fail( 'TestBox is always awesome' ) : true );

}

testbox.system.BaseSpec class, you will be able to execute the class directly via the URL:

All the arguments found in the runner are available as well in a direct bundle execution:

labels: The labels to apply to the execution

testMethod : A list or array of xunit test names that will be executed ONLY!

testSuites : A list or array of suite names that are the ones that will be executed ONLY!

testSpecs : A list or array of test names that are the ones that will be executed ONLY!

reporter : The type of reporter to run the test with
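As a rough illustration of how these arguments combine, a direct bundle request might look like the sketch below. The host, the `tests/specs/MySpec.cfc` path, and the argument values are hypothetical. The `method=runRemote` entry point is assumed to be the remote method exposed by `BaseSpec`, so verify it against your TestBox version.

```text
http://localhost/tests/specs/MySpec.cfc?method=runRemote&reporter=json&labels=disk
```

Each argument from the list above is passed as an ordinary URL parameter, so several can be chained with `&`.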

Assertion

assert

Here are some common assertion methods:

// Normal method

$assert.isTrue()

$assert.between()

$assert.closeTo()

// With BoxLang Dynamic Methods

assertIsTrue()

assertBetween()

assertCloseTo()

mock - The object or data to inject and mock

Not only can you mock properties that are objects, but also mock properties that are simple/complex types. Let's say you have a property in your target object that controls debugging and by default the property is false, but you want to test the debugging capabilities of your class. So we have to mock it to true now, but the property exists in variables.instance.debugMode? No problem mate (Like my friend Mark Mandel says)!

any $property(string propertyName, [string propertyScope='variables'], any mock)

//decorate our user service with mocking capabilities, just to show a different approach

userService = getMockBox().prepareMock( createObject("component","model.UserService") );

//create a mock dao and mock the getUsers() method

mockDAO=getMockBox().createEmptyMock("model.UserDAO").$("getUsers",QueryNew(""));

//Inject it as a property of the user service, since no external injections are found. variables scope is the default.

userService.$property(propertyName="userDAO",mock=mockDAO);

//Test a user service method that uses the DAO

results = userService.getUsers();

assertTrue( isQuery(results) );

//decorate the cache object with mocking capabilities

cache = getMockBox().createMock(object=createObject("component","MyCache"));

//mock the debug property

cache.$property(propertyName="debugMode",propertyScope="instance",mock=true);

Caution Please note that the expectedException() method can ONLY be used while in synchronous mode. If you are running your tests in asynchronous mode, this will not work. We would recommend the closure or annotation approach instead.

expect( function(){ myObj.method(); } ).toThrow( [type], [regex], [message] );

$assert.throws( function(){ myObj.method(); }, [type], [regex], [message] )

function testMyObj(){

expectedException( [type], [regex], [message] );

}

function testMyObj() expectedException="[type]:[regex]"{

// this function should produce an exception

}

class{

// Called at the beginning of a test bundle cycle

function onBundleStart( target, testResults ){

}

// Called at the end of the bundle testing cycle

function onBundleEnd( target, testResults ){

}

// Called anytime a new suite is about to be tested

function onSuiteStart( target, testResults, suite ){

}

// Called after any suite has finalized testing

function onSuiteEnd( target, testResults, suite ){

}

// Called anytime a new spec is about to be tested

function onSpecStart( target, testResults, suite, spec ){

}

// Called after any spec has finalized testing

function onSpecEnd( target, testResults, suite, spec ){

}

}

component labels="disk,os" extends="testbox.system.BaseSpec" {

/*********************************** LIFE CYCLE Methods ***********************************/

function beforeTests(){

application.salvador = 1;

}

function afterTests(){

structClear( application );

}

function setup(){

request.foo = 1;

}

function teardown(){

structDelete( request, "foo" );

}

/*********************************** Test Methods ***********************************/

function testFloatingPointNumberAddition() output="false"{

var sum = 196.4 + 196.4 + 180.8 + 196.4 + 196.4 + 180.8 + 609.6;

// sum.toString() outputs: 1756.8000000000002

// debug( sum );

// $assert.isEqual( sum, 1756.8 );

}

function testIncludes(){

$assert.includes( "hello", "HE" );

$assert.includes( [ "Monday", "Tuesday" ], "monday" );

}

function testIncludesWithCase(){

$assert.includesWithCase( "hello", "he" );

$assert.includesWithCase( [ "Monday", "Tuesday" ], "Monday" );

}

function testnotIncludesWithCase(){

$assert.notincludesWithCase( "hello", "aa" );

$assert.notincludesWithCase( [ "Monday", "Tuesday" ], "monday" );

}

function testNotIncludes(){

$assert.notIncludes( "hello", "what" );

$assert.notIncludes( [ "Monday", "Tuesday" ], "Friday" );

}

function testIsEmpty(){

$assert.isEmpty( [] );

$assert.isEmpty( {} );

$assert.isEmpty( "" );

$assert.isEmpty( queryNew( "" ) );

}

function testIsNotEmpty(){

$assert.isNotEmpty( [ 1, 2 ] );

$assert.isNotEmpty( { name : "luis" } );

$assert.isNotEmpty( "HelloLuis" );

$assert.isNotEmpty(

querySim(

"id, name

1 | luis"

)

);

}

function testSkipped() skip{

$assert.fail( "This Test should fail" );

}

// Skips ALL the tests if the testEnv() returns TRUE

component displayName="TestBox xUnit suite" skip="testEnv"{

function setup(){

application.wirebox = new coldbox.system.ioc.Injector();

structClear( request );

}

function teardown(){

structDelete( application, "wirebox" );

structClear( request );

}

function betaTest() skip{

...

}

function testThrows() skip="true"{

$assert.throws(function(){

var hello = application.wirebox.getInstance( "myINvalidService" ).run();

});

}

function testNotThrows(){

$assert.notThrows(function(){

var hello = application.wirebox.getInstance( "MyValidService" ).run();

});

}

private boolean function testEnv(){

return ( structKeyExists( request, "env") && request.env == "stg" ? true : false );

}

}

function testThrows(){

$assert.skip()

$assert.throws(function(){

var hello = application.wirebox.getInstance( "myINvalidService" ).run()

})

}

it( "can use a mocked stub", function(){

// If the conditions are not met, then skip

if( !conditionsMet() ){

skip( "conditions for execution not met" )

}

c = createStub().$( "getData", 4 )

r = calc.add( 4, c.getData() )

expect( r ).toBe( 8 )

expect( c.$once( "getData" ) ).toBeTrue()

} );

function beforeTests(){

addAssertions({

isAwesome = function( required expected ){

return ( arguments.expected == "TestBox" ? true : fail( 'not TestBox' ) );

},

isNotAwesome = function( required expected ){

return ( arguments.expected == "TestBox" ? fail( 'TestBox is always awesome' ) : true );

}

});

}

function testAwesomeness(){

$assert.isAwesome( 'TestBox' );

}

addAssertions( "model.util.MyAssertions" );

addAssertions( "model.util.MyAssertions, model.util.RegexAssertions" );

addAssertions( [ "model.util.MyAssertions" , "model.util.RegexAssertions" ] );

component{

function assertIsAwesome( expected, actual ){

return ( expected eq actual ? true : false );

}

function assertIsFunky( actual ){

return ( actual gte 100 ? true : false );

}

}

http://localhost/tests/runner.cfm

<!--- Executes all tests in the 'specs' folder with simple reporter by default --->

<bx:param name="url.reporter" default="simple">

<bx:param name="url.directory" default="tests.specs">

<bx:param name="url.recurse" default="true" type="boolean">

<bx:param name="url.bundles" default="">

<bx:param name="url.labels" default="">

<bx:param name="url.excludes" default="">

<bx:param name="url.reportpath" default="#expandPath( "/tests/results" )#">

<bx:param name="url.propertiesFilename" default="TEST.properties">

<bx:param name="url.propertiesSummary" default="false" type="boolean">

<bx:param name="url.editor" default="vscode">

<bx:param name="url.bundlesPattern" default="*Spec*.cfc|*Test*.cfc|*Spec*.bx|*Test*.bx">

<!--- Code Coverage requires FusionReactor --->

<bx:param name="url.coverageEnabled" default="false">

<bx:param name="url.coveragePathToCapture" default="#expandPath( '/root' )#">

<bx:param name="url.coverageWhitelist" default="">

<bx:param name="url.coverageBlacklist" default="/testbox,/coldbox,/tests,/modules,Application.cfc,/index.cfm,Application.bx,/index.bxm">

<!---<bx:param name="url.coverageBrowserOutputDir" default="#expandPath( '/tests/results/coverageReport' )#">--->

<!---<bx:param name="url.coverageSonarQubeXMLOutputPath" default="#expandPath( '/tests/results/SonarQubeCoverage.xml' )#">--->

<!--- Enable batched code coverage reporter, useful for large test bundles which require spreading over multiple testbox run commands. --->

<!--- <bx:param name="url.isBatched" default="false"> --->

<!--- Include the TestBox HTML Runner --->

<bx:include template="/testbox/system/runners/HTMLRunner.cfm">

// BoxLang